Autoscaling feature of HTTP service

Introduction

This document describes the HTTP service autoscaling specification.

Parameters that can be set for autoscaling

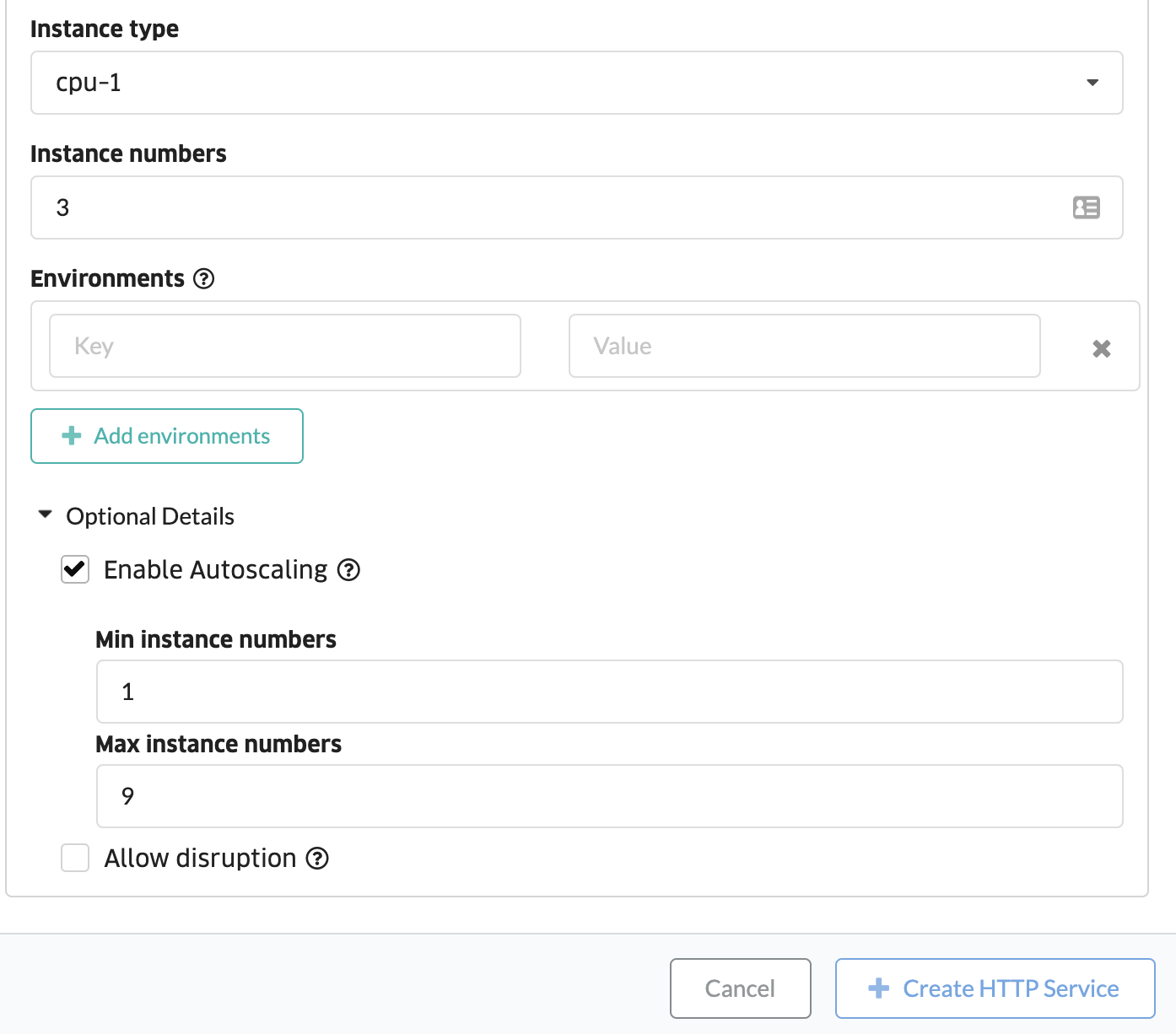

When you create an HTTP service, you can configure whether or not to autoscale the number of instances. Autoscaling is performed based on the CPU load of the instances. When creating the HTTP service, click on “Optional details” and enter the parameters for autoscaling. You can specify the following parameters.

- Enable autoscaling: Check this box to enable autoscaling.

- Instance numbers: The number of instances running when the service first starts.

- Min instance numbers: The minimum number of instances that autoscaling can scale in to.

- Max instance numbers: The maximum number of instances that autoscaling can scale out to.

Each value can be set between 1 and 64, and the condition “minimum number of instances <= number of instances <= maximum number of instances” must hold.
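The constraints above can be checked before the form is submitted. The following is a minimal sketch, not part of the ABEJA Platform SDK or CLI; the function name `validate_instance_settings` and its parameter names are hypothetical and chosen only for illustration.

```python
# Limits taken from the text above: each value must be between 1 and 64.
MIN_ALLOWED = 1
MAX_ALLOWED = 64


def validate_instance_settings(instance_number, min_instance_number, max_instance_number):
    """Return a list of problems with the settings; an empty list means they are valid.

    Hypothetical helper for illustration; it only mirrors the documented rules.
    """
    problems = []

    # Rule 1: every value must lie in the allowed range.
    named_values = (
        ("instance number", instance_number),
        ("min instance number", min_instance_number),
        ("max instance number", max_instance_number),
    )
    for name, value in named_values:
        if not MIN_ALLOWED <= value <= MAX_ALLOWED:
            problems.append(
                f"{name} must be between {MIN_ALLOWED} and {MAX_ALLOWED}, got {value}"
            )

    # Rule 2: minimum <= initial number <= maximum.
    if not min_instance_number <= instance_number <= max_instance_number:
        problems.append(
            "expected min instance number <= instance number <= max instance number"
        )

    return problems
```

For example, `validate_instance_settings(2, 1, 4)` returns an empty list, while `validate_instance_settings(5, 1, 4)` reports that the initial number exceeds the maximum.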

Autoscaling conditions

- When the average CPU utilization of all running instances exceeds 60%, the number of instances increases.

- When the average CPU utilization of all running instances falls below 60%, the number of instances decreases.

- The number of instances you specify applies only at startup. If the average CPU utilization is low afterwards, the service may scale in to the minimum number of instances.
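The threshold rule above can be sketched as a small function. This is an illustration only: the document states the 60% threshold and the direction of scaling, but not how many instances are added or removed at a time, how often utilization is evaluated, or whether any cooldown applies. The `step` parameter and the function name `next_instance_count` are assumptions made for this sketch.

```python
# Threshold taken from the text above.
CPU_THRESHOLD_PERCENT = 60.0


def next_instance_count(current, cpu_percents, min_instances, max_instances, step=1):
    """Return the instance count after one scaling decision.

    cpu_percents holds the CPU utilization (0-100) of each running instance.
    step is an ASSUMPTION: the source does not say how many instances are
    added or removed per decision.
    """
    if not cpu_percents:
        raise ValueError("cpu_percents must contain at least one value")

    average = sum(cpu_percents) / len(cpu_percents)

    if average > CPU_THRESHOLD_PERCENT:
        target = current + step   # scale out
    elif average < CPU_THRESHOLD_PERCENT:
        target = current - step   # scale in
    else:
        target = current          # exactly at the threshold: no change

    # Never leave the configured [min_instances, max_instances] range.
    return max(min_instances, min(max_instances, target))
```

Under these assumptions, a service started with 2 instances, a minimum of 1, and a maximum of 4 would go to 3 instances when the average load is 75%, and to 1 instance when the average load is 15%, which matches the note that a lightly loaded service can drop to its minimum after startup.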

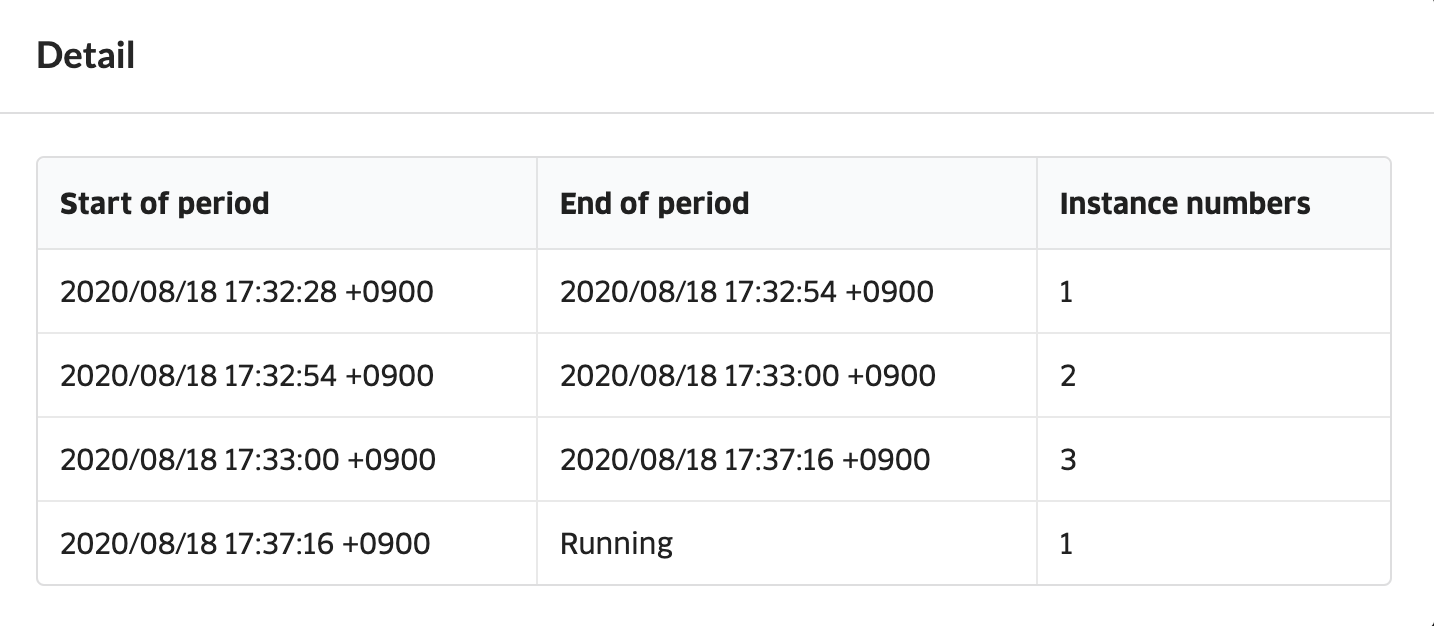

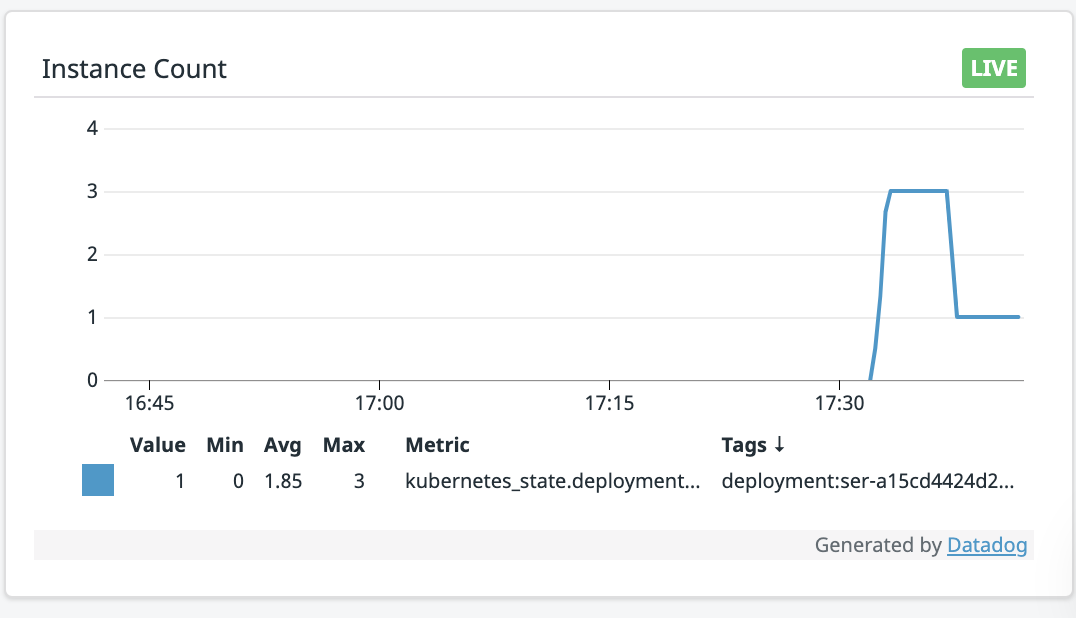

How to check the autoscaling status

You can check the autoscaling status from either the HTTP service metrics or the resource usage.

HTTP service metrics

Resource usage